Introduction: The AI Mobile App Development Revolution

In an already competitive app market, artificial intelligence is quickly shifting from a "nice-to-have" feature of mobile apps to a "must-have." By 2026, consumers will not just appreciate AI features in apps; they will expect them as standard. This shift presents both a challenge and an opportunity for companies and developers using FlutterFlow, the fast-growing visual app development platform.

This article outlines five AI features that will be standard in mobile applications by 2026 and explains how FlutterFlow developers can implement them. Whether you are a startup CEO who wants to keep a new app from feeling outdated in a few years or a developer looking to broaden your FlutterFlow skills, these sections will help you future-proof your application today.

1. Intelligent Personalization Systems

How Personalization Will Define the User Experience by 2026

The era of one-size-fits-all mobile apps is over. Several market studies report that apps offering personalized experiences achieve 38% higher customer retention and 25% higher revenue than apps with generic experiences. By 2026, users will expect every app to understand their preferences and needs and to respond accordingly.

Personalization means more than inserting a user's name. Advanced AI personalization systems analyze a user's behavior, interaction history, and profile data to create meaningful, tailored interactions. From a business perspective, building personalization into your FlutterFlow app now offers a significant competitive advantage, one that will become commonplace within a few years.

Incorporating AI Personalization into FlutterFlow

There are several ways to build personalization features in FlutterFlow without extensive coding experience. The most effective approach combines FlutterFlow's native features with integrations to external AI services that power the personalization.

Start by building a comprehensive user profile in FlutterFlow, backed by a Firestore database. Design the schema to store user preferences, behavior patterns, and interaction history; all of this data is needed to make personalization meaningful.
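As an illustration of the profile described above, here is a minimal Python sketch of the logic (it could be ported to a Dart custom function or run in a backend function). The class and field names `UserProfile`, `preferences`, `category_counts`, and `history` are hypothetical, not a FlutterFlow or Firestore schema.

```python
from dataclasses import dataclass, field
from datetime import datetime, timezone


@dataclass
class UserProfile:
    """Hypothetical shape of a per-user Firestore document used for personalization."""
    user_id: str
    preferences: dict[str, str] = field(default_factory=dict)      # explicit settings, e.g. {"theme": "dark"}
    category_counts: dict[str, int] = field(default_factory=dict)  # behavior pattern: interactions per category
    history: list[dict] = field(default_factory=list)              # recent interaction events, oldest first
    max_history: int = 50                                          # cap so the document stays small

    def record_interaction(self, category: str, action: str) -> None:
        """Append an event and update the aggregated behavior counts."""
        self.history.append({
            "category": category,
            "action": action,
            "at": datetime.now(timezone.utc).isoformat(),
        })
        # Keep only the most recent events.
        self.history = self.history[-self.max_history:]
        self.category_counts[category] = self.category_counts.get(category, 0) + 1

    def top_categories(self, n: int = 3) -> list[str]:
        """Categories the user interacts with most, highest count first."""
        return sorted(self.category_counts, key=self.category_counts.get, reverse=True)[:n]
```

Storing aggregated counts next to a capped event list is one design choice among many: the counts make "what does this user like?" a cheap read, while the short history keeps context for more detailed analysis.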

Implement the personalization logic with a combination of FlutterFlow's built-in conditional visibility and custom functions. Consider dynamic content blocks that change based on the user's segment or preferences. For more advanced personalization, integrate services such as Firebase Remote Config to control personalization settings on the server side.
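A rule-based segmentation step is one simple way to drive dynamic content blocks. The Python sketch below is illustrative only: the segment names, content block IDs, and thresholds are hypothetical. The thresholds are passed in as a dictionary because that is the kind of value you might serve from Firebase Remote Config so it can change without an app update (an assumption about how you would wire it, not a documented FlutterFlow pattern).

```python
# Hypothetical content blocks per segment; in a real app these might live in Firestore.
CONTENT_BLOCKS = {
    "new_user": ["onboarding_tour", "popular_this_week"],
    "power_user": ["advanced_tips", "new_features"],
    "lapsed": ["welcome_back_offer", "whats_new"],
}
DEFAULT_BLOCKS = ["popular_this_week"]

# Illustrative thresholds; a candidate for server-side configuration.
DEFAULT_THRESHOLDS = {"new_days": 7, "lapsed_days": 14, "power_sessions": 20}


def assign_segment(sessions_30d: int, days_since_last_visit: int, account_age_days: int,
                   thresholds: dict = DEFAULT_THRESHOLDS) -> str:
    """Assign a user to a segment; rules are checked in priority order."""
    if account_age_days < thresholds["new_days"]:
        return "new_user"
    if days_since_last_visit > thresholds["lapsed_days"]:
        return "lapsed"
    if sessions_30d >= thresholds["power_sessions"]:
        return "power_user"
    return "regular"


def content_for(segment: str) -> list[str]:
    """Content blocks to show for a segment, falling back to a generic layout."""
    return CONTENT_BLOCKS.get(segment, DEFAULT_BLOCKS)
```

In FlutterFlow terms, the returned block IDs could feed conditional visibility on each block, so only the blocks for the user's segment are shown.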

The real power comes from connecting your FlutterFlow app to dedicated personalization APIs. Services such as Google's Recommendations AI can be integrated through FlutterFlow's HTTP API calls. These services provide advanced recommendation algorithms that would be difficult to build from scratch; consult each provider's developer documentation to integrate them correctly.

Once personalization is in place, test and optimize its effectiveness. Use FlutterFlow's preview mode to experience the app from different user perspectives, and implement analytics tracking to measure the effect of personalization on key metrics such as engagement time, conversion rate, and user retention.
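To measure whether personalization actually moves those metrics, you need a control group. A common technique is deterministic hash-based bucketing, sketched below in Python; the function name `experiment_group` and the group labels are hypothetical. Hashing the user ID together with the experiment name keeps each user in the same group across sessions while giving separate experiments independent splits.

```python
import hashlib


def experiment_group(user_id: str, experiment: str, treatment_share: float = 0.5) -> str:
    """Deterministically assign a user to 'personalized' or 'control'."""
    digest = hashlib.sha256(f"{experiment}:{user_id}".encode()).hexdigest()
    # Map the first 8 hex digits (32 bits) to a number in [0, 1).
    bucket = int(digest[:8], 16) / 0x100000000
    return "personalized" if bucket < treatment_share else "control"
```

You could then record the group as an analytics user property and compare engagement, conversion, and retention between the two groups.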

2. Generative AI Integration with Leading Models

How ChatGPT, Gemini, and Claude Are Changing Mobile Apps

By 2026, integrating generative AI models such as OpenAI's ChatGPT, Google's Gemini, and Anthropic's Claude will be standard in intelligent mobile applications. These large language models (LLMs) are changing how apps approach content creation, problem-solving, and user interaction.

For mobile applications, these models' capabilities are game-changing: delivering personalized content on demand, producing nuanced responses to complex queries, generating images from text (where the model supports it), solving multi-step problems, and adapting their communication style to user preferences.

Early adopters are already reporting results: a 53% increase in engagement time, a 41% increase in task completion rates, and a 37% increase in satisfaction scores compared with apps without generative AI features. By 2026, users will expect these capabilities.

How to Add Generative AI to Your FlutterFlow App (In Simple Terms)

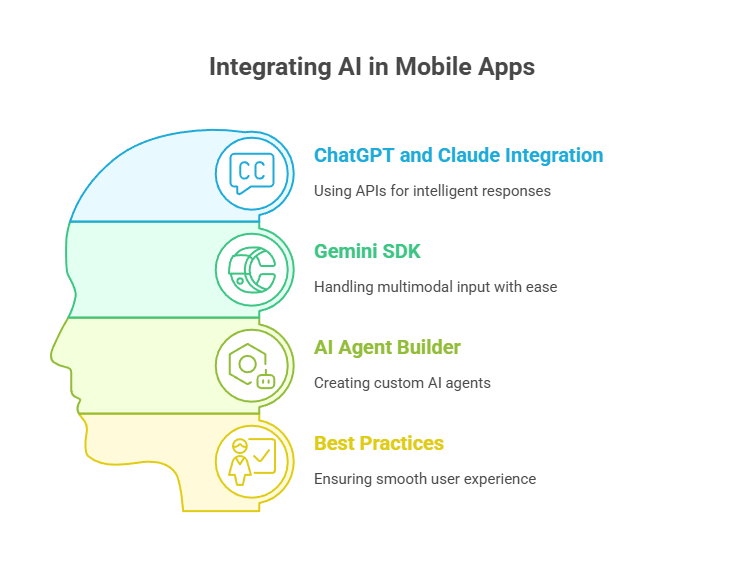

FlutterFlow has made it much easier to build smart apps by adding support for powerful AI features. Even if you're not a technical expert, you can now connect your app to advanced AI models like ChatGPT, Claude, and Gemini. FlutterFlow lets you do this using simple tools like HTTP API calls, the Gemini SDK, and a new AI Agent Builder. Here's how each one works and how you can use it in your app.

1. Connecting ChatGPT and Claude Using API

If you want to make your app respond intelligently to users (like answering questions or giving suggestions), you can use OpenAI's ChatGPT or Anthropic's Claude. These are both powerful AI tools, and you can connect them to your FlutterFlow app using HTTP API calls.

To do this, you first get an API key from OpenAI or Anthropic. Then, inside FlutterFlow, you use the API Call feature to send a request to their servers with the user's message. The AI will respond with a smart answer, which you can show in your app.

You can also control how the AI behaves by adjusting settings like:

- Temperature – How creative the AI's response is

- Max Tokens – How long the response can be

- System Message – Tells the AI what role it should play (like a teacher, doctor, or travel agent)
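To make those settings concrete, here is a Python sketch of the JSON bodies for OpenAI's Chat Completions endpoint (`POST https://api.openai.com/v1/chat/completions`, authenticated with an `Authorization: Bearer` header) and Anthropic's Messages endpoint (`POST https://api.anthropic.com/v1/messages`, with `x-api-key` and `anthropic-version: 2023-06-01` headers). The function names are hypothetical and the model identifiers are placeholders you would take from each provider's documentation. Note the structural difference: OpenAI takes the system message inside `messages`, while Anthropic takes a top-level `system` field and requires `max_tokens`. Some newer OpenAI models expect `max_completion_tokens` instead of `max_tokens`, and temperature ranges differ (0 to 2 for OpenAI, 0 to 1 for Anthropic). Where possible, keep API keys off the device by routing calls through a backend.

```python
def openai_chat_payload(model: str, system: str, user_message: str,
                        temperature: float = 0.7, max_tokens: int = 300) -> dict:
    """Request body for OpenAI's Chat Completions endpoint."""
    return {
        "model": model,
        "messages": [
            {"role": "system", "content": system},   # the role the AI should play
            {"role": "user", "content": user_message},
        ],
        "temperature": temperature,                   # higher = more creative
        "max_tokens": max_tokens,                     # upper bound on response length
    }


def anthropic_messages_payload(model: str, system: str, user_message: str,
                               temperature: float = 0.7, max_tokens: int = 300) -> dict:
    """Request body for Anthropic's Messages endpoint."""
    return {
        "model": model,
        "system": system,                             # top-level, not inside messages
        "messages": [{"role": "user", "content": user_message}],
        "temperature": temperature,
        "max_tokens": max_tokens,                     # required by this API
    }
```

In FlutterFlow, the same structure would go into the API Call's JSON body, with the user's message bound to a variable.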

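To make the AI remember previous chats, store the recent conversation in Firestore, which connects easily to FlutterFlow, and send those earlier messages along with each new request. The APIs do not remember earlier calls on their own.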
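Resending the whole conversation grows token costs with every turn, so a common approach is to resend only the most recent messages. The Python sketch below assumes the stored history uses OpenAI-style `{"role": ..., "content": ...}` dictionaries with the system message at the front of the list; the function name `build_messages` and the default of 10 messages are hypothetical choices.

```python
def build_messages(system: str, history: list[dict], new_message: str,
                   max_turns: int = 10) -> list[dict]:
    """Rebuild the message list for the next API call.

    `history` is the stored conversation (for example, loaded from Firestore),
    oldest first. Only the last `max_turns` messages are resent.
    """
    # Guard needed: history[-0:] would return the whole list.
    recent = history[-max_turns:] if max_turns > 0 else []
    # Some APIs expect the first non-system message to come from the user,
    # so drop any leading assistant replies left over after trimming.
    while recent and recent[0]["role"] != "user":
        recent = recent[1:]
    return ([{"role": "system", "content": system}]
            + recent
            + [{"role": "user", "content": new_message}])
```

A more elaborate variant could summarize older turns instead of dropping them, at the cost of an extra model call.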

Example Use Cases:

- An AI tutor answering student questions

- A fitness coach giving advice

- A customer support bot in an e-commerce app

2. Using Gemini with the Built-in SDK

FlutterFlow now has a built-in Gemini SDK, so apart from adding your Gemini API key, you don't need extra coding or setup to use Google's Gemini models. This makes it very easy to get started.

A key strength of Gemini is multimodal input: it can understand both text and images. For example, a user could upload a photo of a product or a plant and then ask a question about it, and Gemini will analyze the image and give a helpful response.
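FlutterFlow's built-in SDK builds the request for you, but it can help to see what a multimodal request contains. The Python sketch below assembles a body for Gemini's `generateContent` REST method, in which the image is sent inline as base64 alongside the text question. The helper name `gemini_image_question` is hypothetical, and you would choose the model in the endpoint URL according to Google's documentation.

```python
import base64


def gemini_image_question(image_bytes: bytes, question: str,
                          mime_type: str = "image/jpeg") -> dict:
    """Request body combining an image and a text question in one turn."""
    return {
        "contents": [{
            "parts": [
                # The image travels inline, base64-encoded, with its MIME type.
                {"inline_data": {"mime_type": mime_type,
                                 "data": base64.b64encode(image_bytes).decode("ascii")}},
                # The user's question about the image.
                {"text": question},
            ]
        }]
    }
```

For the farming example, `image_bytes` would be the uploaded leaf photo and `question` something like "Is this plant healthy?".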

Example Use Cases:

- In a shopping app, users can ask questions about a product by uploading its photo

- In a farming app, users can upload a picture of a leaf and ask if the plant is healthy

We also built a multilingual AI farming app, FarmGPT, using FlutterFlow. It lets farmers ask questions about plants, animals, or diseases in multiple languages and get instant advice. It uses AI to analyze images, answer questions, and suggest treatments for crop issues. FarmGPT was selected as a winning entry in the FlutterFlow AI Hackathon 2024 and shows what's possible when AI and FlutterFlow are combined well.

Best Practices to Keep in Mind

When using AI in your app, it's important to give users a smooth experience. Here are a few tips:

- Show a loading screen while the AI is thinking or processing the answer

- Handle errors properly. If the API fails or times out, show a simple error message to the user

- Cache common questions and answers, so you don't send too many API requests. This saves cost and speeds up your app

- Be careful with user data. Don't send private information to AI services without clear user consent
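The caching and error-handling tips can be combined in one small component. The Python sketch below is a hypothetical in-memory cache (the `AnswerCache` class, the one-hour TTL, and the fallback text are all illustrative): it normalizes questions so trivially different phrasings share an entry, expires entries after a time-to-live, and turns errors raised by the fetch function (for example, a timeout) into a friendly message without caching the failure.

```python
import time
from typing import Callable


class AnswerCache:
    """In-memory TTL cache for AI answers keyed by a normalized question."""

    def __init__(self, ttl_seconds: float = 3600,
                 clock: Callable[[], float] = time.monotonic):
        self.ttl = ttl_seconds
        self.clock = clock  # injectable so expiry can be tested
        self._store: dict[str, tuple[float, str]] = {}

    @staticmethod
    def _key(question: str) -> str:
        # Lowercase and collapse whitespace.
        return " ".join(question.lower().split())

    def get_or_fetch(self, question: str, fetch: Callable[[str], str],
                     fallback: str = "Sorry, something went wrong. Please try again.") -> str:
        key = self._key(question)
        hit = self._store.get(key)
        if hit and self.clock() - hit[0] < self.ttl:
            return hit[1]  # fresh cached answer: no API request
        try:
            answer = fetch(question)
        except Exception:
            # Any error from fetch becomes a user-friendly message; nothing is cached.
            return fallback
        self._store[key] = (self.clock(), answer)
        return answer
```

Exact-match caching only helps with repeated questions; matching semantically similar questions would need embeddings, which is a larger design decision.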

Thanks to FlutterFlow's updates, adding AI to your app is now simple and powerful. Whether you want to build a chatbot, use image analysis, or create a personal assistant, you have all the tools you need — even if you're not an expert developer.

The combination of HTTP API calls, the Gemini SDK, and the AI Agent Builder gives you many ways to create smart, helpful, and engaging apps for real-world use. If you're building in FlutterFlow, now is the perfect time to start adding AI features to make your app stand out.

3. AI Agents: Creating Task-specific Assistants with FlutterFlow

Why AI Agents Will Transform Apps by 2026

By 2026, AI agents, specialized AI systems that perform specific tasks autonomously, will be standard in advanced mobile apps. These agents are more than chatbots: they are capable assistants that can carry out a series of actions on the user's behalf.

The business impact of effective AI agents is substantial. Apps with effective AI agents report 43% less time spent on user tasks, 38% fewer customer support requests, and 29% higher user satisfaction ratings. These gains translate directly into higher retention and lower operating costs.

With the AI agent capabilities FlutterFlow recently launched, this technology is now accessible to FlutterFlow developers. The playing field has leveled: any company can deploy sophisticated autonomous capabilities that previously required substantial resources and expertise.

Building Effective AI Agents with FlutterFlow's New Capabilities

FlutterFlow's tools let you define an agent's capabilities and components, connect it to the data sources it needs, and apply task-specific logic without extensive coding. Clearly defining the agent's tasks up front puts you on the right path to building a task-specific assistant for your business application.

Start by defining what the agent should do and deciding its scope. Successful AI agents operate in a specific domain rather than trying to be all things to all users. For example, you might build a booking agent (to schedule appointments), a support agent (to troubleshoot common issues), or a shopping agent (to help users find and purchase products). In FlutterFlow's agent builder, you then define the tools your agent can use, such as database queries, API calls, math functions, or other custom functions. These tools are the building blocks your agent uses to perform its tasks.
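Conceptually, an agent's tools are named functions the model can ask to run, plus a dispatcher that refuses anything outside the agent's scope. The Python sketch below illustrates that idea for a hypothetical booking agent; `list_open_slots`, `book_slot`, the `{"name": ..., "arguments": ...}` call format, and the hard-coded slot data are all stand-ins, not FlutterFlow's agent builder API.

```python
from typing import Any, Callable

# Registry of tools the agent is allowed to call, keyed by name.
TOOLS: dict[str, Callable[..., Any]] = {}


def tool(fn: Callable[..., Any]) -> Callable[..., Any]:
    """Register a function as a tool the agent may call by name."""
    TOOLS[fn.__name__] = fn
    return fn


@tool
def list_open_slots(date: str) -> list[str]:
    # Stand-in for a database query of available appointments.
    return {"2026-03-02": ["09:00", "14:30"]}.get(date, [])


@tool
def book_slot(date: str, time_slot: str) -> dict:
    # Stand-in for an API call that creates the booking.
    return {"status": "confirmed", "date": date, "time": time_slot}


def run_tool_call(call: dict) -> Any:
    """Execute a tool call of the form {"name": ..., "arguments": {...}}.

    Unknown tools are rejected so the agent cannot act outside its scope.
    """
    name = call.get("name")
    if name not in TOOLS:
        raise ValueError(f"Unknown tool: {name}")
    return TOOLS[name](**call.get("arguments", {}))
```

Keeping the tool list small and explicit is what enforces the narrow scope recommended above.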

Next, build your agent's knowledge base using FlutterFlow's vector database. The knowledge base is the information your agent draws on to answer questions or act on users' requests. Populate it with everything relevant to the agent's domain, such as your products, services, policies, and other domain knowledge.
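Under the hood, a vector database finds the passages whose embedding vectors are most similar to the embedding of the user's question. The Python sketch below shows that retrieval step with cosine similarity. The passages and 3-dimensional vectors are made up for illustration; real embeddings come from an embedding model and have hundreds of dimensions, and FlutterFlow's own implementation may differ.

```python
import math

# Toy knowledge base: (passage, embedding). Passages and vectors are hypothetical.
KNOWLEDGE = [
    ("Appointments can be cancelled free of charge up to 24 hours in advance.", [0.9, 0.1, 0.0]),
    ("Orders ship within 5 business days.", [0.1, 0.9, 0.1]),
    ("Refunds are issued to the original payment method.", [0.2, 0.3, 0.9]),
]


def cosine(a: list[float], b: list[float]) -> float:
    """Cosine similarity: 1.0 means the vectors point the same way."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


def retrieve(query_vec: list[float], k: int = 1) -> list[str]:
    """Return the k passages most similar to the query embedding."""
    ranked = sorted(KNOWLEDGE, key=lambda item: cosine(query_vec, item[1]), reverse=True)
    return [text for text, _ in ranked[:k]]
```

The retrieved passages are then added to the agent's prompt so its answer is grounded in your own content.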

At the core of agent development is the action flow: the sequence of steps your agent takes to complete a user's task. FlutterFlow's visual editor lets you build these flows without writing code, linking user inputs to the appropriate responses or actions. As you author these interactions, you are effectively building decision trees in which the agent takes different actions depending on the user's input.
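In code, an action flow like this is a small state machine. The Python sketch below models a hypothetical booking flow: `state` records the current step and what has been collected so far, and each branch corresponds to one node of the decision tree you would draw in the visual editor. The step names and messages are illustrative.

```python
def booking_flow(state: dict, user_input: str) -> tuple[str, dict]:
    """Advance a hypothetical booking flow by one step.

    Returns the agent's reply and the updated state.
    """
    state = dict(state)  # copy so the caller's state is not mutated
    step = state.get("step", "start")
    if step == "start":
        state["step"] = "service"
        return "Which service would you like to book?", state
    if step == "service":
        state.update(service=user_input.strip(), step="date")
        return "What date works for you?", state
    if step == "date":
        state.update(date=user_input.strip(), step="confirm")
        return f"Book {state['service']} on {state['date']}? (yes/no)", state
    if step == "confirm":
        if user_input.strip().lower() in {"yes", "y"}:
            state["step"] = "done"
            return "Your appointment is booked.", state
        # Any other answer sends the user back to choose a service again.
        return "No problem, let's start over. Which service would you like to book?", {"step": "service"}
    return "This booking is complete.", state
```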

Testing is critical to effective agent development. Use FlutterFlow's testing tools to simulate conversations and requests and to identify edge cases and failure points. Add logging to track how your agent performs, including user feedback and satisfaction, and use that data as a feedback loop for continuous improvement.

If you are developing a more capable "power" agent, also implement handoff protocols that transfer the conversation to a human when needed. When a request is beyond the agent's capabilities, keeping the user with the agent leads to frustration and the impression that the agent is unhelpful. Aim for a system in which the agent resolves the majority of routine requests on its own and routes everything else smoothly to human support.
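A handoff protocol needs an explicit rule for when to escalate. The Python sketch below uses three hypothetical signals: the user explicitly asking for a person, low confidence in the agent's answer (which you might derive from retrieval similarity or a model self-assessment, an assumption), and repeated unresolved turns. The class name, thresholds, and phrases are illustrative.

```python
from dataclasses import dataclass


@dataclass
class HandoffPolicy:
    min_confidence: float = 0.6  # below this, the agent should not answer alone
    max_failures: int = 2        # consecutive unresolved turns before escalating
    escalation_phrases: tuple[str, ...] = ("human", "agent", "representative")

    def should_hand_off(self, confidence: float, consecutive_failures: int,
                        user_message: str) -> bool:
        """Return True when the conversation should be routed to a person."""
        text = user_message.lower()
        # An explicit request for a person always wins.
        if any(phrase in text for phrase in self.escalation_phrases):
            return True
        return confidence < self.min_confidence or consecutive_failures >= self.max_failures
```

When this returns True, the app could create a support ticket that includes the conversation so far, so the user does not have to repeat themselves.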

4. Visual Intelligence and AR

How Visual AI Will Change Mobile Apps by 2026

By 2026, visual intelligence functions—such as image recognition, object detection, and augmented reality—will be standard in mobile apps across industries. These capabilities are already blurring the boundaries between digital and physical experiences, enabling new dimensions of user engagement.

Visual AI has a significant bottom-line impact. For example, retail apps with virtual try-on features report conversion rate increases of up to 40%, and real estate apps with AR visualization report a 3.2x increase in engagement time. As the novelty wears off, these capabilities will shift from exciting extras to core drivers of competitive mobile apps, benefiting both users and organizations.

Implementing Visual Intelligence in FlutterFlow Apps

Thanks to its integrations with specialist services and APIs, FlutterFlow offers several ways to implement visual intelligence.

Basic image recognition is the easiest place to start, through API integrations with services such as Google Cloud Vision or Azure Computer Vision. These services let your app detect objects, read text, detect faces, and label what an image contains. You can use these capabilities for visual search, content moderation, or automatic tagging.
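As an example of automatic tagging, the Python sketch below builds a label-detection request for Google Cloud Vision's `images:annotate` method (`POST https://vision.googleapis.com/v1/images:annotate`) and turns the `labelAnnotations` in the response into tags. The helper names, the 0.7 score cutoff, and the sample response in the test are illustrative; authentication (an API key or OAuth credentials) is omitted and is best handled on a backend.

```python
import base64


def label_request(image_bytes: bytes, max_results: int = 10) -> dict:
    """Request body asking Cloud Vision for label detection on one image."""
    return {"requests": [{
        "image": {"content": base64.b64encode(image_bytes).decode("ascii")},
        "features": [{"type": "LABEL_DETECTION", "maxResults": max_results}],
    }]}


def tags_from_response(response: dict, min_score: float = 0.7) -> list[str]:
    """Turn label annotations into lowercase tags, keeping only confident ones."""
    responses = response.get("responses") or [{}]
    labels = responses[0].get("labelAnnotations", [])
    return [label["description"].lower()
            for label in labels if label.get("score", 0.0) >= min_score]
```

Raising `min_score` trades coverage for precision; for content moderation you would typically use a different feature type rather than labels.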

For augmented reality, FlutterFlow has no native AR tooling, but you can embed web-based AR experiences built with WebXR or access native AR capabilities through custom code and custom widgets. Combining visual intelligence with AR enables product previews, spatial mapping, and interactive guides.

Design for smart loading and processing of visual information. Give users immediate feedback while images are processed in the background, and use FlutterFlow's state management to control loading states and decide what information to display to the user and when.

Keep in mind that visual intelligence features usually require camera permission and a fair amount of user guidance. Design the permission request carefully, and add visual indicators that clearly show users how to interact with these features.

5. AI-Powered Security and Authentication

The Importance of AI Security for Mobile Apps in 2026

By 2026, as security concerns grow, AI-backed protection for mobile applications will become an absolute necessity. As digital threats become more sophisticated, traditional security measures alone will not be enough to protect user data and privacy.

AI-based security features analyze patterns, detect anomalies, and assess potential threats before they cause damage. Unlike static security measures, AI systems can adapt to new attack vectors as threats evolve. For users, these features provide protection with little added friction.

Different Ways to Implement Advanced Security Features with FlutterFlow

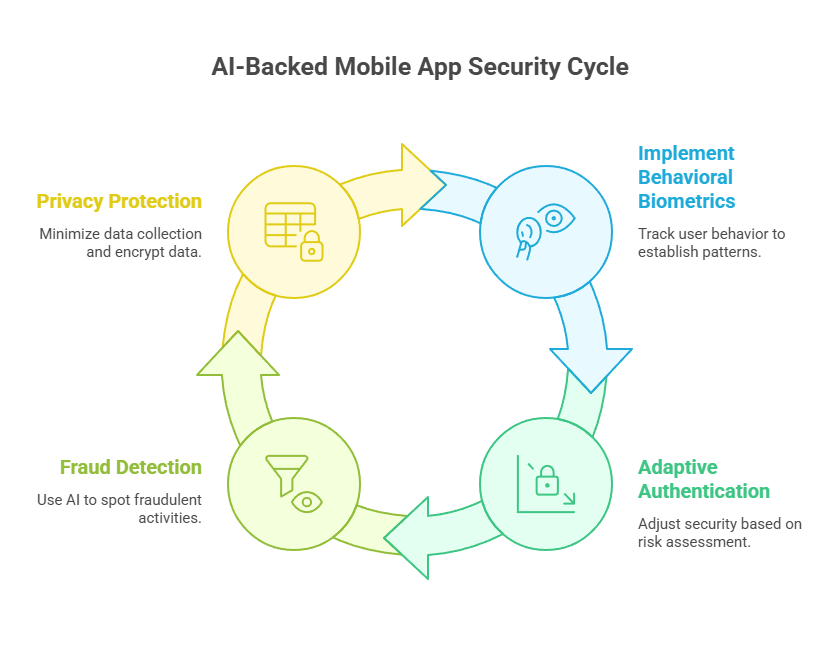

FlutterFlow provides several options for implementing AI-backed security features which will protect your users and data.

Start with behavioral biometrics: non-intrusively track user behavior to establish normal usage patterns, so your app can flag activity that deviates from them. FlutterFlow's event tracking and custom functions make such behavioral monitoring easier to build.
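A simple statistical baseline is a reasonable first step before any machine learning. The Python sketch below flags a measurement that lies more than a chosen number of standard deviations (a z-score) from the user's own history. The signal, for example seconds between login and first action, is a hypothetical metric, and the threshold and minimum sample count are illustrative.

```python
import statistics


def is_anomalous(history: list[float], observed: float,
                 threshold: float = 3.0, min_samples: int = 10) -> bool:
    """Flag `observed` if it deviates strongly from this user's baseline.

    Nothing is flagged until enough history exists to form a baseline.
    """
    if len(history) < min_samples:
        return False
    mean = statistics.fmean(history)
    stdev = statistics.stdev(history)
    if stdev == 0:
        # Perfectly constant history: any change at all is unusual.
        return observed != mean
    return abs(observed - mean) / stdev > threshold
```

A flag like this would not lock anyone out by itself; it is better used as one input to the risk-based authentication described next.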

Implement adaptive authentication, which adjusts security requirements based on a risk assessment. For example, use FlutterFlow's conditional logic to require additional verification when a user logs in from a new device or an unfamiliar location. This adds security without creating friction for legitimate users.
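The risk assessment can start as a simple weighted score. In the Python sketch below, the signals, weights, and step names (`one_time_code`, `block_and_verify_by_email`) are hypothetical. The resulting step is what FlutterFlow's conditional logic would branch on, for example by navigating to a one-time-code page.

```python
from dataclasses import dataclass


@dataclass
class LoginContext:
    device_id: str
    country: str   # e.g. derived from IP geolocation
    hour_utc: int  # 0 to 23


def risk_score(ctx: LoginContext, known_devices: set[str], usual_countries: set[str]) -> int:
    """Add up simple risk signals; the weights are illustrative assumptions."""
    score = 0
    if ctx.device_id not in known_devices:
        score += 40
    if ctx.country not in usual_countries:
        score += 40
    if 0 <= ctx.hour_utc < 5:
        score += 20  # hypothetical "unusual hours" window
    return score


def required_step(score: int) -> str:
    """Map a risk score to the verification the app should request."""
    if score >= 80:
        return "block_and_verify_by_email"
    if score >= 40:
        return "one_time_code"
    return "none"
```

Legitimate users on their usual device and location see no extra step, which is the low-friction property described above.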

For fraud detection in transactional apps, connect to dedicated AI security services through FlutterFlow's API integrations. These services analyze transaction patterns and user behavior to flag potentially fraudulent activity before a transaction completes.

Privacy protection is also critical. Use Firestore security rules and FlutterFlow's security features to apply data minimization principles: design your app to collect only the data it needs, and anonymize or encrypt sensitive data appropriately.
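Data minimization also applies to what you send to external AI services. The Python sketch below keeps only an allow-list of fields and replaces the user ID with a keyed HMAC-SHA256 hash. This is pseudonymization, which is weaker than full anonymization: whoever holds the secret key can still link records. The allow-listed field names are hypothetical, and the key should live on a backend, never in the app.

```python
import hashlib
import hmac

# Hypothetical minimal set of fields an external service actually needs.
ALLOWED_FIELDS = {"age_range", "interests", "language"}


def minimize_for_ai(profile: dict, secret_key: bytes) -> dict:
    """Keep only allow-listed fields and replace the user ID with a keyed hash."""
    pseudonym = hmac.new(secret_key, profile["user_id"].encode(), hashlib.sha256).hexdigest()
    slim = {k: v for k, v in profile.items() if k in ALLOWED_FIELDS}
    slim["pseudonymous_id"] = pseudonym  # stable per user, but not the raw ID
    return slim
```

Because the hash is stable, the external service can still group a user's requests without ever receiving an email address or account ID.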

Conclusion: Getting Your FlutterFlow App Prepared for the Future

The five AI features discussed in this article—intelligent personalization, generative AI, agents, visual intelligence, and AI security—will be the drivers of successful mobile applications by 2026. For FlutterFlow developers, there are considerable advantages to implementing these features today that will only become more pronounced over time.

Although these AI features may seem complex, FlutterFlow's extensive capabilities make them approachable to design and integrate, even for developers with little coding or data science experience. By connecting external AI services through third-party APIs and custom code blocks, developers can build sophisticated AI-powered applications.

Focus on integrating these features thoughtfully rather than adding features for their own sake. Prioritize solving user problems so the app delivers more core value. Once you understand the performance impact and have tuned for mobile-specific constraints such as battery use and data consumption, you can concentrate on delivering as much value to users as possible.

As you integrate these AI capabilities into your FlutterFlow apps, remember that the technology should be invisible to the user. The best AI integrations feel natural and helpful; weaker ones feel forced or gimmicky. Focus on user value, and aim to exceed user expectations in 2026 rather than merely meet them.

Are you ready to evolve your FlutterFlow app with these AI features? The mobile future is intelligent, and the time is now to begin building that intelligence.
